Large Language Models (LLMs) like GPT-4 and Gemini have become essential to AI transformation, driving innovation across industries. They generate human-like text, process complex queries, and assist in everything from customer support to enterprise automation. But despite their strengths, they have a major flaw—they forget.

LLMs process information within a fixed context window. Once that limit is exceeded, older inputs get pushed out, leading to lost context, inconsistent responses, and repetitive queries. They also don’t update their knowledge unless they go through expensive and time-consuming retraining, meaning they can quickly become outdated in fast-moving industries like finance, healthcare, and law. For businesses relying on AI to streamline operations and provide accurate insights, these gaps can be a deal breaker. That’s why the next step in AI transformation isn’t just smarter models—it’s making them better at retrieving, remembering, and applying real-time knowledge. This is where Retrieval-Augmented Generation (RAG) comes in.

Why Large Language Models Forget

LLMs do not handle information the way human memory does. On their own, they do not retain past interactions, recall previous conversations, or learn incrementally. Instead, they rely on statistical probabilities to generate responses, which makes them powerful but flawed in long-term knowledge retention, real-time accuracy, and adaptability.

Limited Context Window

LLMs process information in tokens, and each model has a predefined limit on how many tokens it can handle at once. When that limit is reached, older inputs are removed from memory, which leads to inconsistencies in long conversations. This makes it difficult for AI to track ongoing discussions, remember important details, or provide coherent responses in multi-step interactions. In industries such as legal, healthcare, and finance, where accurate recall of past data is critical, this limitation creates significant challenges.
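To make the effect concrete, here is a minimal sketch of how a fixed token budget pushes older messages out of a conversation. It assumes a crude whitespace tokenizer and a tiny 15-token window; real models use subword tokenizers and far larger limits, and the names `count_tokens` and `fit_to_window` are hypothetical.

```python
def count_tokens(text: str) -> int:
    # Assumption: one token per whitespace-separated word.
    # Real tokenizers split text into subword units instead.
    return len(text.split())


def fit_to_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined size fits the budget."""
    kept: list[str] = []
    used = 0
    # Walk backwards from the newest message and stop at the first
    # message that would exceed the budget.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


conversation = [
    "My account number is 12345",
    "I was charged twice in March",
    "The second charge was for 40 dollars",
    "Can you refund the duplicate charge",
]

window = fit_to_window(conversation, max_tokens=15)
print(window)
```

With this budget only the last two messages survive, so the account number from the first message is no longer visible to the model when it answers the refund request.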

Lack of Persistent Long-Term Memory

LLMs do not store information between sessions unless an external memory mechanism is integrated. Every new interaction begins with a clean slate, meaning AI cannot recall prior conversations or user-specific details. This is particularly problematic for applications that require continuous learning, such as AI-powered customer support, legal compliance tools, and personalized healthcare diagnostics. Without a retrieval system to provide historical context, these use cases become impractical for standalone models.
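As a rough illustration of what an external memory mechanism might look like, the sketch below persists per-user facts in a JSON file so that a later session can read them back. The `SessionMemory` class, the file layout, and the user ID are all hypothetical; a production system would more likely use a database or a vector store.

```python
import json
import os
import tempfile


class SessionMemory:
    """Hypothetical helper that stores per-user facts in a JSON file."""

    def __init__(self, path: str) -> None:
        self.path = path

    def _load(self) -> dict[str, list[str]]:
        # A missing file simply means nothing has been remembered yet.
        if not os.path.exists(self.path):
            return {}
        with open(self.path, encoding="utf-8") as f:
            return json.load(f)

    def remember(self, user_id: str, fact: str) -> None:
        data = self._load()
        data.setdefault(user_id, []).append(fact)
        with open(self.path, "w", encoding="utf-8") as f:
            json.dump(data, f)

    def recall(self, user_id: str) -> list[str]:
        return self._load().get(user_id, [])


store_path = os.path.join(tempfile.mkdtemp(), "memory.json")

# Session 1: the assistant learns something about the user.
first_session = SessionMemory(store_path)
first_session.remember("user-42", "Prefers email over phone support")

# Session 2: a fresh object with no in-process state reads the same file.
second_session = SessionMemory(store_path)
recalled = second_session.recall("user-42")
print(recalled)
```

The recalled facts would then be added to the prompt at the start of the new session, which is how the model appears to "remember" the user even though its own weights have not changed.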

Static Knowledge and Outdated Information

Once an LLM is trained, its knowledge is fixed. Any information that emerges after its training cutoff is inaccessible unless the model is retrained or fine-tuned. This makes AI unreliable for use cases requiring up-to-date information, such as real-time financial forecasting, regulatory compliance, or scientific research. AI models relying solely on pre-trained data risk generating responses based on outdated knowledge, which can lead to misinformation, security risks, and compliance failures.

Risk of Overwriting Knowledge During Fine-Tuning

Fine-tuning an LLM on new datasets allows it to specialize in certain domains, but this process comes with the risk of catastrophic forgetting, where previously learned information is unintentionally replaced or diluted. A legal AI updated with recent case law may deprioritize older but still relevant precedents. A healthcare AI integrating new treatment protocols may overlook critical legacy medical knowledge. In corporate knowledge management, historical company data can be lost when models are fine-tuned for recent policies. This makes fine-tuning a risky and inefficient method for maintaining a continuously evolving knowledge base.

Hallucination and Lack of Verified Sources

LLMs generate responses based on probabilities rather than factual correctness, leading them to fabricate information when faced with knowledge gaps. Without access to verified, real-time data, they produce plausible but incorrect answers, which can be particularly damaging in industries where accuracy is critical. A financial AI may base investment recommendations on outdated economic models, a medical AI may suggest incorrect treatments, and a contract review AI may invent legal clauses that do not exist. This lack of fact-checking and real-time knowledge retrieval makes LLMs unreliable for high-stakes decision-making.

How Retrieval-Augmented Generation (RAG) Fixes the Forgetting Problem

Large language models are powerful, but on their own they cannot retrieve real-time, external knowledge. RAG bridges this gap: instead of relying solely on training data that may be outdated, the model pulls in fresh, relevant information when needed. When a user asks a question, the system first searches external sources such as databases, APIs, knowledge repositories, or real-time documents to find the most relevant data. It then adds this retrieved information to the prompt, so the model generates a response that is grounded in current facts and tailored to the specific query. Because only the most relevant passages are passed to the model, this process eases the pressure on the context window, reduces hallucinations, and keeps the AI working with the most current information available in its sources.
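The retrieve-then-generate flow can be sketched in a few lines. This is a simplified illustration, not a production design: it ranks documents with bag-of-words cosine similarity, whereas real RAG systems typically use learned embeddings and a vector database, and it stops at building the prompt rather than calling a model. The sample documents and the `retrieve` and `build_prompt` helpers are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Step 1: return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Step 2: place the retrieved passages in front of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


# Hypothetical knowledge base; in practice this is a database or index.
documents = [
    "Refund policy: customers may request a refund within 30 days of purchase.",
    "Shipping policy: standard delivery takes 5 to 7 business days.",
    "Security policy: passwords must be rotated every 90 days.",
]

query = "How many days do customers have to request a refund?"
passages = retrieve(query, documents, k=1)
prompt = build_prompt(query, passages)
print(prompt)  # Step 3 (not shown): send this prompt to the LLM of your choice.
```

Updating the knowledge base is then a matter of editing `documents`, with no retraining of the model involved.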

Unlike standalone LLMs, whose knowledge becomes outdated over time, RAG-powered systems are dynamic and adaptable, grounding their answers in retrievable, verifiable sources instead of static knowledge alone. They can keep up with evolving industries without constant retraining, improve accuracy and trustworthiness, and carry long, complex conversations with far less loss of context, because earlier details can be stored and retrieved when needed. This makes RAG a game-changer in AI transformation, particularly for businesses that require AI-driven solutions with high accuracy, real-time insights, and the ability to scale across different domains. For enterprises looking to implement AI at scale, RAG isn’t just an upgrade; it’s the missing piece that makes AI dependable in practice.

Real-World Applications of RAG in AI Transformation

RAG isn’t just a theoretical fix—it’s already being used to enhance AI across multiple industries, solving real business challenges.

1. AI-Powered Enterprise Assistants

Companies integrating AI into their workflows often struggle with outdated or inconsistent knowledge bases. RAG-powered AI assistants can retrieve up-to-date company policies, past customer interactions, and historical data in real time, allowing teams to make better, more informed decisions.

2. Smarter Healthcare AI

In medicine, accuracy is critical. A RAG-enhanced medical AI can fetch the latest research, treatment guidelines, and patient history instantly, providing doctors with insights based on real-time, evidence-based data rather than relying on outdated medical knowledge.

3. Legal and Compliance Automation

Regulations are constantly evolving. A traditional LLM might give outdated legal advice, but a RAG-powered legal AI can retrieve current laws, compliance updates, and case precedents, ensuring accuracy in highly regulated industries.

4. AI for Financial and Market Analysis

Financial institutions use AI to predict market trends and assess risk, but outdated models can be costly. RAG enables AI-driven financial analysis tools to pull real-time stock data, news, and earnings reports, ensuring traders and analysts make data-driven decisions.

5. E-commerce and Customer Support

A chatbot using a standard LLM may provide generic answers, but a RAG-powered chatbot can retrieve customer-specific details, order histories, and support tickets, delivering truly personalized interactions.

By integrating RAG into AI transformation strategies, businesses aren’t just improving AI models—they’re making them smarter, faster, and more reliable for real-world applications.

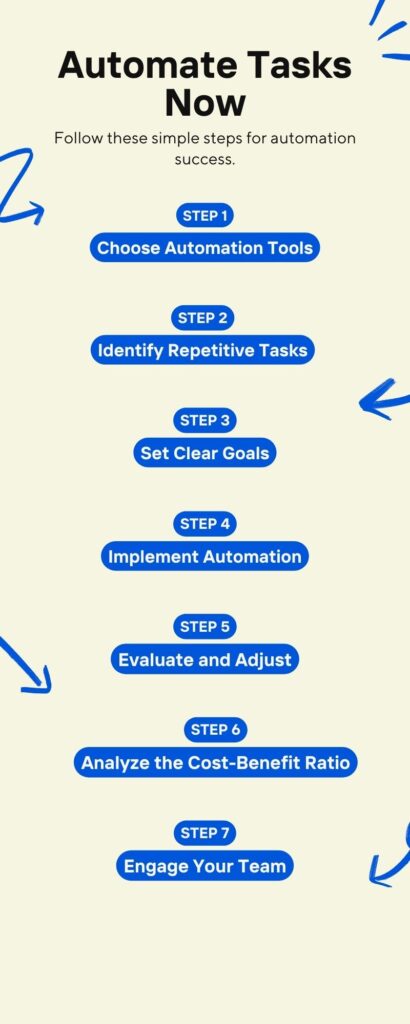

Making AI Smarter with RAG

AI models are powerful, but they struggle when they rely only on what they were trained on. Without a way to retrieve real-time knowledge, they produce outdated or incomplete answers. Retrieval-Augmented Generation solves this by allowing AI to pull in fresh, relevant data whenever needed.

For businesses investing in AI transformation, this is an essential shift. Whether in healthcare, finance, or enterprise automation, AI needs to provide reliable, up-to-date information rather than static responses. RAG makes AI more accurate, adaptable, and useful in real-world applications.

The future of AI belongs to those who refine it, not just scale it. Smarter models will drive innovation, and businesses that adopt RAG today will set the standard for AI-driven solutions.

At Code District, we help companies build AI that retrieves, understands, and applies knowledge effectively. If you are ready for AI that delivers real value, let’s build it together.